Loom

A downloadable tool for Windows, macOS, and Linux

Buy Now: $5.00 USD or more

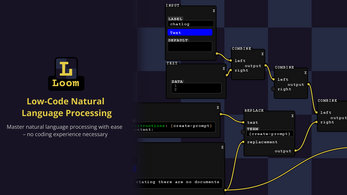

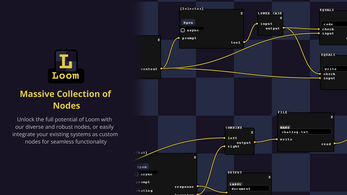

Create your own AI chatbot or natural language processing (NLP) API quickly and conveniently with Loom, a low-code graphical IDE (Integrated Development Environment) that lets you leverage the power of OpenAI's GPT-3 and Cohere.

Features:

+ Support for the OpenAI and Cohere language model APIs

+ Low-code, modular design

+ Built-in web server to easily create NLP APIs without any coding knowledge.

+ Support for Java-style scripting via Groovy

Read the Getting Started post for a quick tutorial.

Purchase


To download this tool, you must purchase it at or above the minimum price of $5 USD. You will get access to the following files:

loom.jar (65 MB)

Development log

- Loom Full Release (Apr 21, 2023)

- Loom Alpha 6.0 (Feb 07, 2022)

- Loom Alpha 4.0 - UX Updates and More Nodes (Nov 15, 2021)

- Loom Alpha 3.0 - Codex Support, Chat-Logs and UI Upgrades (Oct 18, 2021)

- Loom Alpha 2.0 - Changelog (Jul 08, 2021)

- Loom Check-In: Code Nodes (Jul 05, 2021)

- Loom - Getting Started (Jun 13, 2021)

Comments


Are updates actually being pushed to itch.io for this program, or just to Patreon?

The current itch.io version is the same as the Patreon version at the moment.

Thank you for the reply! All the best for the new year etc.

Hi there,

Can you let me know how to get Loom to pick up my local install of gpt4all? I'm getting a blank output. Do I need to set a path variable?

gpt4all looks in the models folder that is created at runtime in the same directory as loom.jar. Just put your models in there.
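To make the folder layout concrete, here is a minimal sketch in plain Java that works out where a "models" folder next to the running jar would be and lists what is in it. It only illustrates the layout described above (loom.jar and a models folder side by side); the class and method names (`ModelsFolder`, `modelsDirFor`, `listModels`) are hypothetical and are not Loom's actual code, and it makes no assumption about which model file extensions gpt4all accepts.

```java
import java.io.IOException;
import java.net.URISyntaxException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.stream.Stream;

public class ModelsFolder {

    // Hypothetical helper: the "models" folder sits in the same directory as the jar.
    static Path modelsDirFor(Path jarPath) {
        return jarPath.toAbsolutePath().getParent().resolve("models");
    }

    // Location of the jar (or classes directory) this class was loaded from.
    static Path runningJarPath() throws URISyntaxException {
        return Paths.get(ModelsFolder.class.getProtectionDomain()
                .getCodeSource().getLocation().toURI());
    }

    // Sorted names of the regular files in the folder; empty if the folder does not exist yet.
    static List<String> listModels(Path modelsDir) throws IOException {
        List<String> names = new ArrayList<>();
        if (!Files.isDirectory(modelsDir)) {
            return names;
        }
        try (Stream<Path> entries = Files.list(modelsDir)) {
            entries.filter(Files::isRegularFile)
                   .forEach(p -> names.add(p.getFileName().toString()));
        }
        Collections.sort(names);
        return names;
    }

    public static void main(String[] args) throws Exception {
        Path dir = modelsDirFor(runningJarPath());
        System.out.println("Models folder: " + dir);
        System.out.println("Models found: " + listModels(dir));
    }
}
```

For example, if loom.jar lives in `/opt/loom`, the models would go in `/opt/loom/models`.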

Thank you! Awesome job!

Hey this looks really cool!

Probably a noob question, but is there an install guide? After looking through the files in WinRAR for a while I couldn't find an installer, and I'm not sure how to get started.

The only thing you need is Java. You can get it from the "Download Java for Windows" page. Once you install Java, double-click loom.jar and it should work.

Nice thanks!

Hey there, hope you don't mind me asking yet another question (thanks for your answers so far!). No rush to get back to me.

Is it possible to send the output from one node to multiple nodes? Like, let's say I want to take a text input and send it to two different classifier prompts -- is that possible? I can try and find a workaround if not.

Thanks!!

Hey, sorry, I'm just seeing this. You can connect an output terminal to any number of input terminals; however, each input terminal can only have one connection. A node executes once it has received all the information it needs. Some nodes execute in parallel with others (like the GPT-3 node, and the code node if "async" is checked), while others don't need the extra thread, so they execute in series, in the order in which they became ready. Hope this helps!
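The wiring rules above (one output can fan out to many inputs, each input accepts a single connection, and a node fires once all its inputs have arrived) can be sketched as a small dataflow runner. This is a hypothetical illustration, not Loom's engine: the names `NodeGraph`, `Node`, `connect`, and `run` are made up, every node runs on a single thread (the "async" option is left out), and ready nodes run in first-ready, first-run order.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.function.Function;

public class NodeGraph {

    // Hypothetical node: named input terminals, one output value, and a function over its inputs.
    static final class Node {
        final String name;
        final List<String> inputNames;
        final Function<Map<String, String>, String> body;
        final Map<String, String> received = new HashMap<>();
        final Map<String, Node> sources = new HashMap<>(); // at most one source per input
        final List<Edge> outgoing = new ArrayList<>();    // an output may fan out to many inputs
        String output;

        Node(String name, List<String> inputNames, Function<Map<String, String>, String> body) {
            this.name = name;
            this.inputNames = inputNames;
            this.body = body;
        }

        boolean isReady() {
            return received.size() == inputNames.size();
        }
    }

    static final class Edge {
        final Node target;
        final String input;

        Edge(Node target, String input) {
            this.target = target;
            this.input = input;
        }
    }

    // Connects an output to an input terminal; a second connection to the same input is rejected.
    static void connect(Node from, Node to, String input) {
        if (!to.inputNames.contains(input)) {
            throw new IllegalArgumentException("unknown input: " + to.name + "." + input);
        }
        if (to.sources.containsKey(input)) {
            throw new IllegalStateException("input already connected: " + to.name + "." + input);
        }
        to.sources.put(input, from);
        from.outgoing.add(new Edge(to, input));
    }

    // Runs each node once all of its inputs have arrived, in the order nodes became ready.
    static List<String> run(List<Node> nodes) {
        List<String> order = new ArrayList<>();
        Queue<Node> ready = new ArrayDeque<>();
        for (Node n : nodes) {
            if (n.isReady()) {
                ready.add(n); // nodes with no inputs can start immediately
            }
        }
        while (!ready.isEmpty()) {
            Node n = ready.poll();
            n.output = n.body.apply(n.received);
            order.add(n.name);
            for (Edge e : n.outgoing) {
                e.target.received.put(e.input, n.output);
                if (e.target.isReady()) {
                    ready.add(e.target);
                }
            }
        }
        return order;
    }

    public static void main(String[] args) {
        Node text = new Node("text", Collections.emptyList(), in -> "I love this product");
        Node sentiment = new Node("sentiment", Arrays.asList("text"),
                in -> in.get("text").contains("love") ? "positive" : "negative");
        Node topic = new Node("topic", Arrays.asList("text"), in -> "product");
        Node report = new Node("report", Arrays.asList("sentiment", "topic"),
                in -> in.get("sentiment") + "/" + in.get("topic"));

        connect(text, sentiment, "text"); // one output...
        connect(text, topic, "text");     // ...feeding two classifier nodes
        connect(sentiment, report, "sentiment");
        connect(topic, report, "topic");

        System.out.println(run(Arrays.asList(text, sentiment, topic, report)));
        System.out.println(report.output);
    }
}
```

Running `main` prints `[text, sentiment, topic, report]` and then `positive/product`: the single text input feeds both classifier stand-ins, and `report` only runs after both of its inputs have arrived.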

Also, sorry, one more question: are you planning to add text-davinci-002?

Thanks!

Yes, I am currently testing a build with all of the new engines.

Right on! Looking forward to it.

Hey there, just downloaded and getting started. Looks cool! One question:

Is it possible to get text to wrap? I know I can break it up into lines, but will that be weird with how the text gets fed into the API?

So I debated adding auto-wrap and decided against it because I wanted the user to have complete control over every character. I will add it to code nodes eventually, though.

Ok cool. I get it, good call.

Hey bruv, love this concept. Any chance to change the model from GPT-3 to a different one?

I'm looking into adding more models in the future, especially as new APIs get created. I've already implemented support for Cohere's API; it's available in the build on my Patreon.

You are a magical wizard and a blessing to laymen. I hope you plan on adding Davinci-Instruct, as it's really good for a lot of prompts. I wish I could help!

After using: Fantastic.

I have made sooo much progress with this since I first used it, and it has gotten better with usage and comprehension. Very excited for API/REST nodes. This is the gateway to web apps for both laymen and professionals.

Merry Christmas! I just released the build with the HTTP nodes. You can read about it on the newly launched Patreon: "Loom Alpha 5.0 - HTTP requests, accessibility options, and Patreon launch" (Kingroka on Patreon).